The Four Shapes of Prompting: How AI Interaction Evolves

When people think about prompting, they usually picture a chat box.

You type something, and the model replies.

But prompting is far more than a conversation interface. Prompting is the core interaction layer between humans and models, the bridge that defines how intelligence is used.

Depending on the level of maturity, prompting can take very different shapes: from free-form chat messages to embedded, self-optimizing code components.

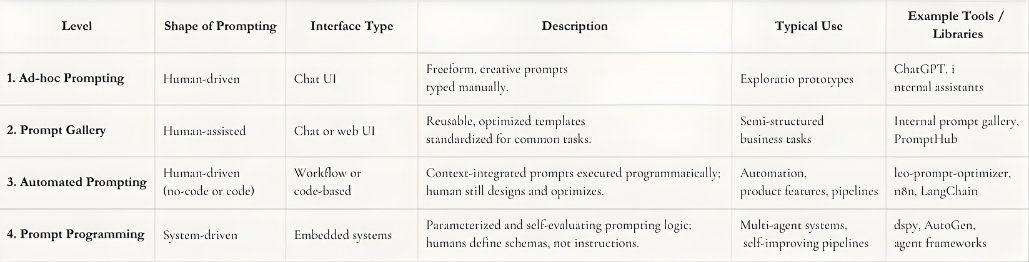

At Sailpeak, we typically describe four progressive shapes of prompting, aligned with how organizations evolve their GenAI capabilities:

- Ad-hoc Prompting: freeform human exploration

- Prompt Galleries: reusable, optimized templates

- Automated Prompting: contextual and optimized execution (no-code or code)

- Prompt Programming: autonomous, parameterized systems

Each level represents a step forward in control, reliability, and scalability, moving from experimentation to product-grade prompting.

1. Ad-hoc Prompting: Exploration and Discovery

This is where everyone starts. A user interacts directly with a model through a chat interface, typing instructions, trying examples, and refining manually until the output looks right.

At this stage, prompting is creative and human-driven. It is perfect for:

- Experimenting with model capabilities

- One-off tasks (summarization, rewriting, ideation)

- Rapid prototyping

However, it is also fragile. Prompts are rarely standardized, and results vary across users. In organizations, this often leads to what we call prompt chaos, with hundreds of slightly different versions for the same task.

That is why the next step is to make prompting consistent and shareable.

2. Prompt Galleries: From Art to Repeatability

Once teams find effective prompts, they start collecting and reusing them.

A Prompt Gallery is a structured library of tested, optimized prompts for recurring tasks.

At this level, prompting remains user-facing, but quality and structure improve dramatically.

Users can select a pre-approved “recipe” instead of retyping or reinventing one.

For example, an internal compliance assistant could include a prompt such as:

“Summarize this internal policy in less than 200 words, preserving all compliance-related clauses.”

Each employee uses the same template, ensuring consistent structure, accuracy, and tone.

Prompt galleries are the first form of prompt governance, bridging the gap between human creativity and organizational standardization.
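At its simplest, a prompt gallery is a registry of approved, versioned templates that users fill in rather than rewrite. The sketch below is a minimal illustration, not a specific product: the `GALLERY` dictionary, the `compliance_policy_summary` key, and the `render` helper are hypothetical names, and Python's standard `string.Template` stands in for whatever templating a real gallery uses.

```python
from string import Template

# Hypothetical gallery: each entry is a pre-approved, versioned "recipe".
GALLERY = {
    "compliance_policy_summary": {
        "version": "1.0",
        "template": Template(
            "Summarize this internal policy in less than 200 words, "
            "preserving all compliance-related clauses.\n\nPolicy:\n$policy_text"
        ),
    },
}


def render(recipe_id: str, **fields: str) -> str:
    """Fill an approved template; raises KeyError if the recipe or a field is missing."""
    entry = GALLERY[recipe_id]
    # substitute() (unlike safe_substitute()) fails loudly on missing placeholders.
    return entry["template"].substitute(**fields)


prompt = render("compliance_policy_summary", policy_text="All vendors must ...")
print(prompt)
```

Keeping the wording inside the gallery means users only supply the variable part, so every summary request starts from the same vetted instruction.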

3. Automated Prompting: Context-Driven and Optimized Workflows

When prompts start automating repetitive, context-rich tasks, they evolve into automated prompting.

Here, prompting is not only standardized; it is integrated into workflows and environments, both no-code and code-based.

Instead of a user manually sending inputs, a workflow fills the prompt dynamically with real-time variables:

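```python
def build_prompt(incident_category: str, risk_level: str, impact_scope: str, policy_version: str) -> str:
    # A workflow step calls this with real-time values for each incident.
    return f"""Summarize the latest compliance incident report.
Focus on: {incident_category}, {risk_level}, and {impact_scope}.
Include recommendations aligned with internal policy {policy_version}.
"""
```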

This approach can live anywhere: in an n8n workflow, an internal automation platform, or a Python pipeline.

What matters is that prompts are executed systematically with the right context.

In automation, prompting becomes functional rather than conversational.
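In a code-based pipeline, executing prompts "systematically with the right context" usually means filling the same template from every incoming record and refusing to send a prompt when context is missing. A minimal sketch, assuming incident records arrive as dictionaries whose keys match the template placeholders; `call_model` is a hypothetical stub for whichever model client the workflow actually uses.

```python
from string import Template

# Same placeholders as the incident-report prompt; the wording is illustrative.
INCIDENT_TEMPLATE = Template(
    "Summarize the latest compliance incident report.\n"
    "Focus on: $incident_category, $risk_level, and $impact_scope.\n"
    "Include recommendations aligned with internal policy $policy_version."
)


def call_model(prompt: str) -> str:
    """Hypothetical stub: replace with a real LLM client call."""
    return f"[model output for a {len(prompt)}-character prompt]"


def run_batch(records: list[dict]) -> list[dict]:
    """Fill the template from each record and call the model, reporting missing context."""
    results = []
    for record in records:
        try:
            prompt = INCIDENT_TEMPLATE.substitute(record)  # extra keys such as "id" are ignored
        except KeyError as missing:
            # Report the gap instead of sending an incomplete prompt.
            results.append({"id": record.get("id"), "error": f"missing field {missing}"})
            continue
        results.append({"id": record.get("id"), "output": call_model(prompt)})
    return results


records = [
    {"id": 1, "incident_category": "data access", "risk_level": "high",
     "impact_scope": "EU customers", "policy_version": "v3.2"},
    {"id": 2, "incident_category": "vendor", "risk_level": "low"},
]
for row in run_batch(records):
    print(row)
```

The second record is flagged rather than silently producing a prompt with empty fields, which is what makes the run reproducible and auditable.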

To ensure quality and reliability, teams can leverage optimization tools such as leo-prompt-optimizer (3,700+ downloads on PyPI).

This library helps teams iteratively test prompt variants, measure performance, and select the most efficient version, whether that prompt will run in a notebook, a production API, or a no-code flow.
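The underlying loop (run each variant on a test set, score the outputs, keep the best) can be sketched without any particular library. This is not the leo-prompt-optimizer API; it is a hypothetical, library-agnostic illustration in which `score` is a toy metric (the share of required keywords present in the output) and `fake_model` is a deterministic stand-in for a real model call.

```python
from statistics import mean
from typing import Callable

Variant = str  # a prompt template with a {text} placeholder


def score(output: str, required: list[str]) -> float:
    """Toy metric: fraction of required keywords that appear in the output."""
    hits = sum(1 for word in required if word.lower() in output.lower())
    return hits / len(required) if required else 1.0


def select_best(variants: list[Variant], dataset: list[dict],
                model: Callable[[str], str]) -> tuple[Variant, float]:
    """Run every variant on every example and keep the highest mean score."""
    results = []
    for variant in variants:
        scores = [score(model(variant.format(text=ex["text"])), ex["required"])
                  for ex in dataset]
        results.append((variant, mean(scores)))
    # max() keeps the first variant among ties, so list variants in order of preference.
    return max(results, key=lambda pair: pair[1])


def fake_model(prompt: str) -> str:
    """Stand-in model: keeps full detail only when the prompt asks for clauses."""
    body = prompt.split("\n", 1)[1]
    return body if "clauses" in prompt else body[:20]


VARIANTS = [
    "Summarize briefly.\n{text}",
    "Summarize, preserving all compliance clauses.\n{text}",
]
DATASET = [
    {"text": "Vendors must encrypt data and report breaches within 72 hours.",
     "required": ["encrypt", "72 hours"]},
]
best, best_score = select_best(VARIANTS, DATASET, fake_model)
print(best_score)  # 1.0
```

In practice the metric, the dataset, and the model call are where the real effort goes; the selection loop itself stays this simple.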

In this model, humans remain the drivers. They design, optimize, and deploy the prompts, but automation and context integration make them scalable and reproducible.

This level represents the bridge between creativity and engineering discipline. You own the prompt, you test it, and you decide how and where to deploy it.

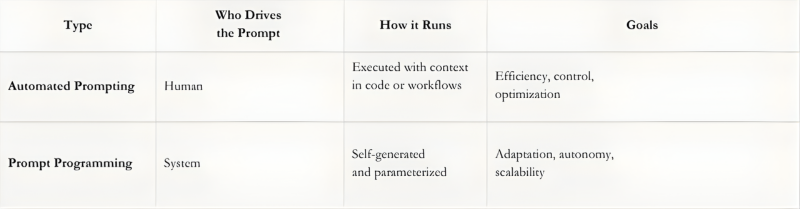

4. Prompt Programming: Autonomous and Parameterized Systems

Prompt Programming goes one step further.

While Automated Prompting focuses on executing optimized prompts with code or workflows, Prompt Programming defines how the system itself generates and interprets prompts, shifting control from the human to the architecture.

At this level, the system no longer executes fixed prompt templates.

Instead, it uses parameters, schemas, and metrics to guide model behavior programmatically, often without exposing the underlying prompt at all.

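This is where frameworks like DSPy come in. They let developers define the intent and output structure rather than the exact phrasing of a prompt:

```python
import dspy

# A signature declares the task: the docstring states the intent, the fields the I/O schema.
class PolicySummarizer(dspy.Signature):
    """Summarize an internal policy, preserving compliance-related clauses."""

    policy_text: str = dspy.InputField()
    summary: str = dspy.OutputField()

# Predict builds the underlying prompt from the signature (a configured LM is needed to run it).
summarize = dspy.Predict(PolicySummarizer)
# Metrics such as factual consistency are Python functions passed to dspy.Evaluate
# or to an optimizer, not attributes of the signature.
```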

Here, the system interprets the developer’s specification and builds the underlying prompts; outputs are then scored against metrics such as factual consistency during evaluation and optimization.

You do not write the prompt; you describe the task.

The model architecture takes care of reasoning, chaining, and evaluation.
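To make "describe the task, not the prompt" concrete without relying on any framework's internals, here is a toy, plain-Python illustration. The `TaskSpec` class and `compile_prompt` function are hypothetical; frameworks such as DSPy generate, and can optimize, far richer prompts than this fixed format.

```python
from dataclasses import dataclass, field


@dataclass
class TaskSpec:
    """Hypothetical task description: intent plus named input/output fields."""
    instruction: str
    inputs: dict[str, str] = field(default_factory=dict)   # name -> description
    outputs: dict[str, str] = field(default_factory=dict)  # name -> description


def compile_prompt(spec: TaskSpec, **values: str) -> str:
    """Turn the specification into prompt text; the developer never writes it by hand."""
    lines = [spec.instruction, ""]
    for name, desc in spec.inputs.items():
        lines.append(f"{name} ({desc}): {values[name]}")
    lines.append("")
    lines.append("Respond with the following fields:")
    for name, desc in spec.outputs.items():
        lines.append(f"{name}: <{desc}>")
    return "\n".join(lines)


spec = TaskSpec(
    instruction="Summarize an internal policy, preserving compliance-related clauses.",
    inputs={"policy_text": "full text of the policy"},
    outputs={"summary": "concise summary"},
)
print(compile_prompt(spec, policy_text="All vendors must ..."))
```

Because the prompt text is generated from the specification, the format or instruction can be changed (by a person or an optimizer) without touching the code that calls the task.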

This approach is especially powerful for structured pipelines and agentic systems that need to reason autonomously or adapt prompts dynamically.

A hybrid approach often works best.

Even in DSPy-driven architectures, using tools like leo-prompt-optimizer at the design stage can help ensure complex output fields are framed correctly, improving consistency before full automation.

In short: Automated Prompting runs optimized prompts.

Prompt Programming designs systems that learn how to prompt themselves.

Engineering Reality Check

- Automating prompts does not eliminate the need for human evaluation; it amplifies the cost of a poorly designed prompt.

- Metrics like factual consistency or response stability depend on data quality as much as prompt structure.

- True prompt programming requires versioned evaluation datasets and consistent metric tracking, which are still rare in most enterprise setups.
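Versioned evaluation and consistent metric tracking can start small. A minimal sketch, assuming a JSON-serializable evaluation set: hashing the dataset gives it a content-based version, and every metric is logged with that version and the prompt version, so scores are only compared when they were measured on the same data. The `log_metric` and `comparable` helpers, the in-memory log, and the version labels are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def dataset_version(examples: list[dict]) -> str:
    """Content hash of the evaluation set; any edit to the data changes the version."""
    canonical = json.dumps(examples, sort_keys=True, ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


METRIC_LOG: list[dict] = []  # in practice, a database or experiment tracker


def log_metric(prompt_version: str, examples: list[dict], name: str, value: float) -> dict:
    """Record a metric alongside the prompt and dataset versions it was measured on."""
    entry = {
        "prompt_version": prompt_version,
        "dataset_version": dataset_version(examples),
        "metric": name,
        "value": value,
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }
    METRIC_LOG.append(entry)
    return entry


def comparable(a: dict, b: dict) -> bool:
    """Two runs are comparable only if they share a metric and a dataset version."""
    return a["metric"] == b["metric"] and a["dataset_version"] == b["dataset_version"]


eval_set = [{"text": "Vendors must encrypt data.", "expected": "encryption required"}]
# Placeholder scores for illustration, not real results.
run_a = log_metric("summary-v1", eval_set, "factual_consistency", 0.82)
run_b = log_metric("summary-v2", eval_set, "factual_consistency", 0.88)
print(comparable(run_a, run_b))  # True: same metric, same dataset version
```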

The Progressive Spectrum of Prompting Maturity

In practice, mature GenAI systems often blend multiple levels: prompt galleries for governance, automated prompting for workflows, and prompt programming for autonomous reasoning.

The art lies not in climbing a hierarchy but in orchestrating these layers intentionally.

From Prompting to Prompt Architecture

Prompting is evolving from an art of communication into an architectural discipline.

The maturity journey follows two intertwined paths:

- Interface evolution: from chat UIs to code and system integration

- Control evolution: from human prompting to autonomous orchestration

Each organization will find itself at a different level depending on its GenAI maturity, resources, and product complexity. The key is not to skip steps but to design prompting structures that evolve from usability to scalability.

Final Thought

Prompting has always been about expressing intent, but its form is changing.

From ad-hoc experiments to automated and autonomous systems, it is becoming the connective tissue of AI architecture.

At Sailpeak, we see prompting as the new API layer between humans and intelligence.

Whether through chat interfaces, automation flows, or embedded systems, the principle remains the same:

What you say to the model defines what it can do for you.

The next chapter is not about prompting better; it is about building systems that prompt with purpose.


Sailpeak helps organisations evolve their prompting practices from experimentation to scalable AI architecture. Our team supports you in building prompt galleries, automating context-rich workflows, and designing robust system-level prompting logic. Get in touch today, and we’ll help you transform prompting into a strategic layer of your GenAI capabilities.
